Intuitive Visual Modeling

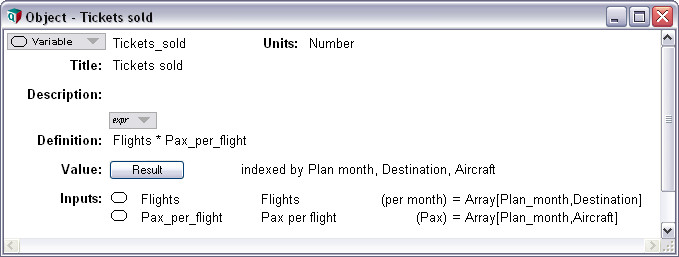

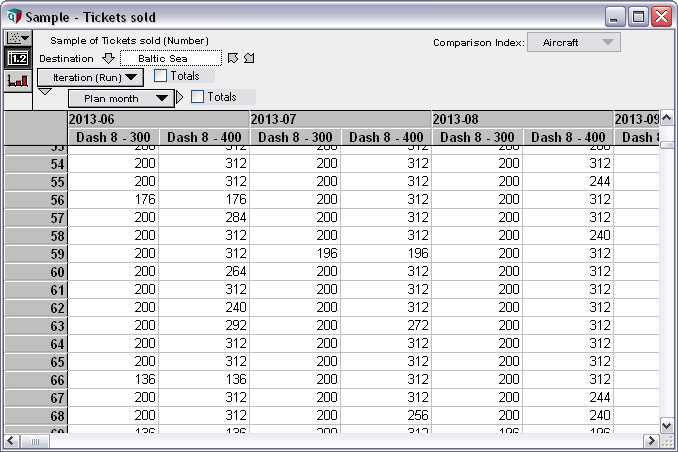

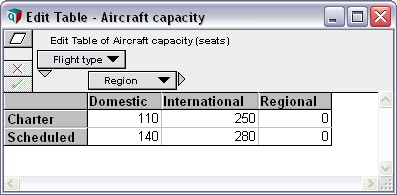

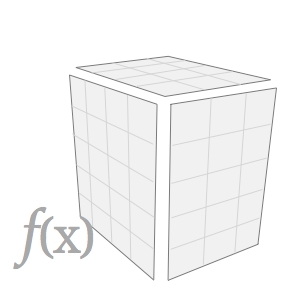

Multidimensional Calculation

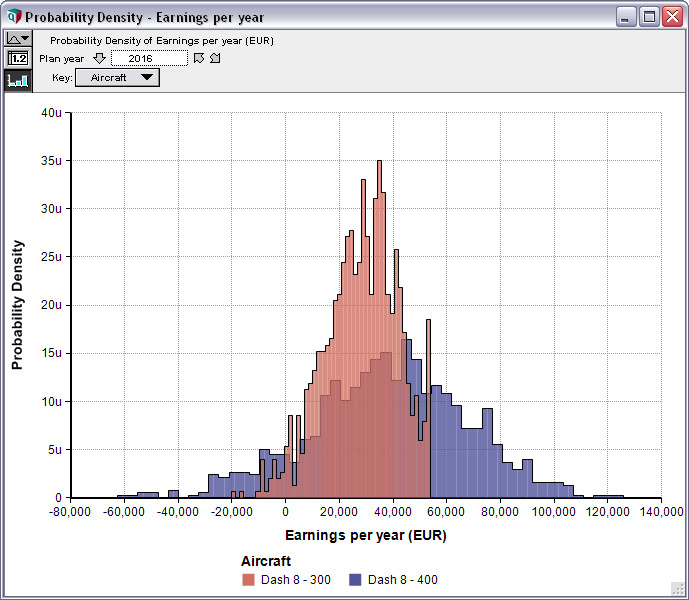

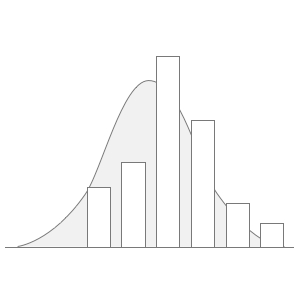

Monte Carlo Simulation

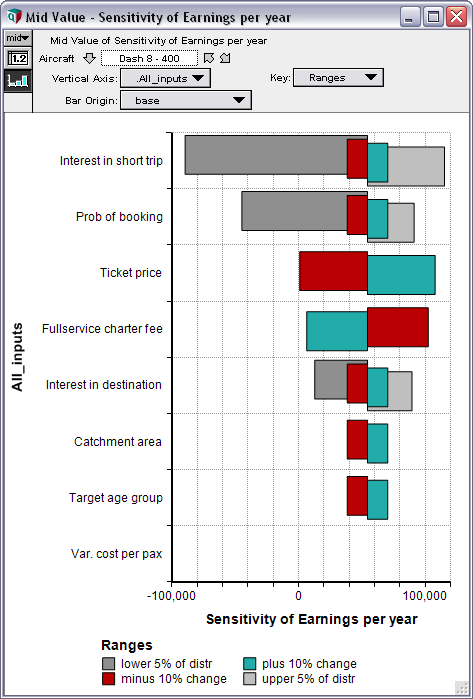

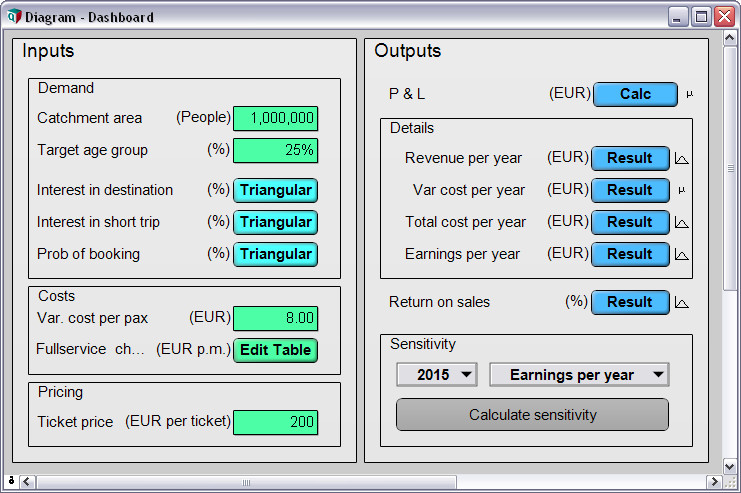

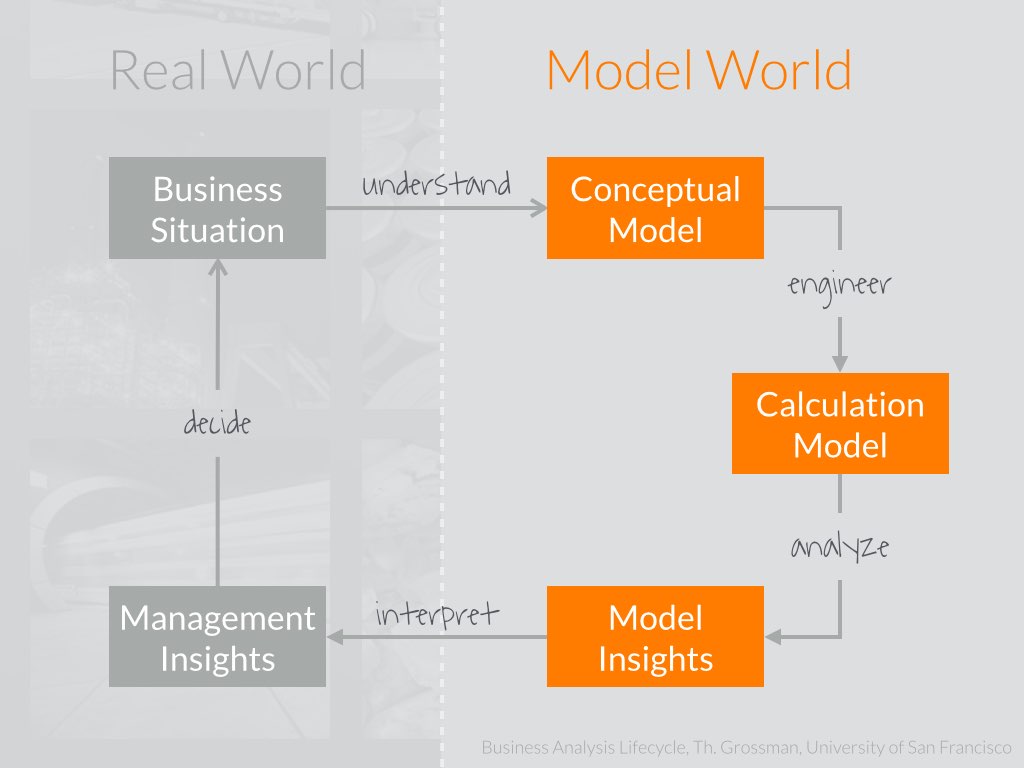

The ultimate purpose of a financial model is not to generate numbers from a set of assumptions. It is to gain insight into a business situation so that managers can make better commercial decisions.

Think of the model as a laboratory where you can test your decision alternatives safely and cost-efficiently. In fact, for most business situations, building a financial model is the only way to test a decision before acting on it in the real world.

But building the actual calculation model is only part of the story. Although Excel is quite good at "crunching numbers," it provides almost no support for conceptual modeling or for gaining insight.

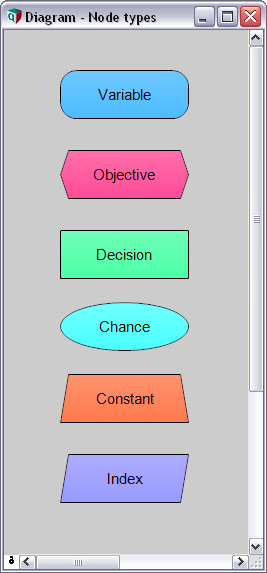

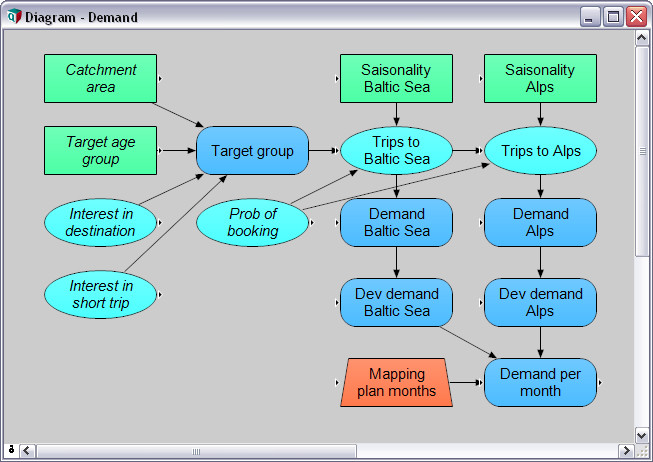

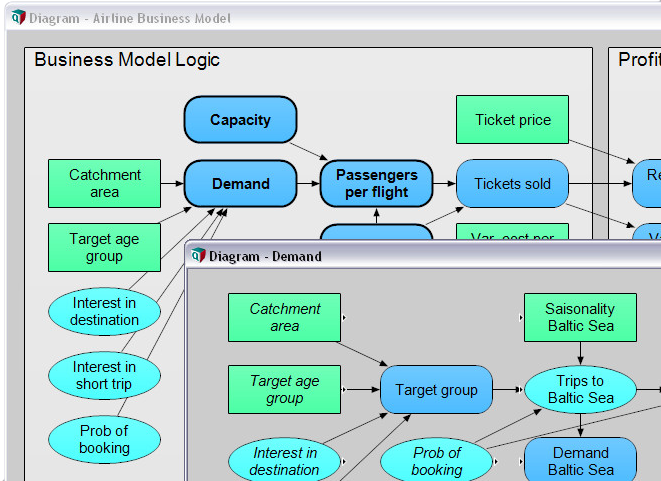

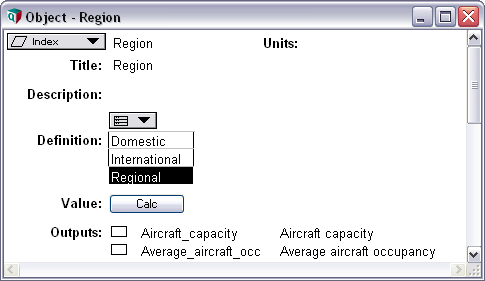

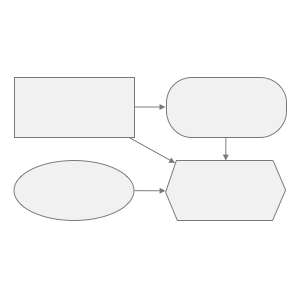

Analytica, however, provides the right feature for every step of the "Business Analytics Lifecycle." You can build your model the way it should be built: from understanding the business situation and sketching a conceptual, quantitative model, through filling in the most complex multidimensional calculations, to sensitivity and uncertainty analysis. Moving back and forth through the cycle is a snap, which makes model development truly flexible and agile. You can change your model at any time, even late in development, usually without editing a single formula.

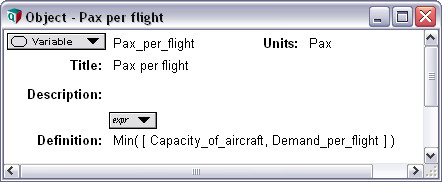

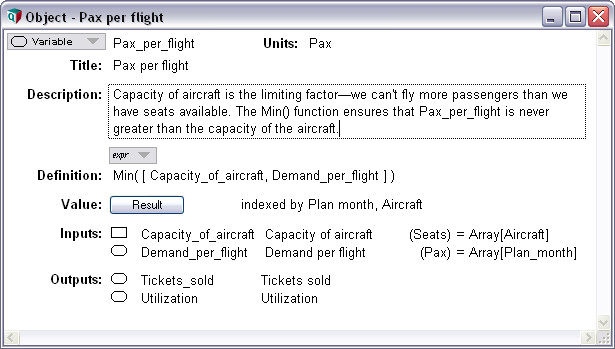

For financial modeling, the main problem with Excel and other spreadsheets is that they work at too low a level of representation. The grid deals with cells. You, the financial modeler, are (or should be) thinking about higher-level entities, such as variables, influences, modules, hierarchies, dimensions and arrays, uncertainties, and sensitivities.

A modeling tool like Analytica, which displays these entities and lets you interact with them directly, is much more intuitive to use. It reduces the need to mentally translate between your "inner representation" and the one the software uses. Analytica makes models much easier to write, review, verify, explain, and extend. It reduces errors in two ways: it prevents many kinds of errors from being made in the first place, and it makes the remaining errors easier to detect and fix.